“From Kargil’s peaks to today’s fragile LoC, this book reminds us that the echoes of 1999 still shape India’s military conscience.”

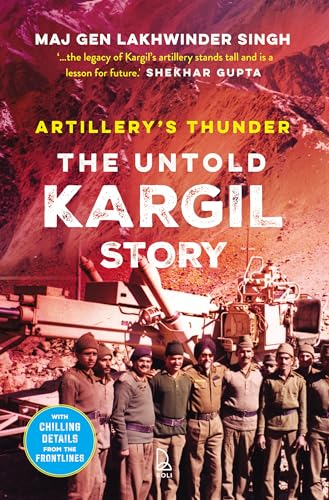

Few books pierce the surface of India’s modern military history with the clarity and courage that Artillery’s Thunder does. Written by Major General (Retd) Lakhwinder Singh, this is not another sanitized retelling of the 1999 Kargil War—it is a ground-up reconstruction of how India’s artillery turned the tide when confusion, unpreparedness, and political hesitation loomed large.

From the very first pages, Singh pulls readers into the raw tempo of Operation Vijay. He paints the early chaos with startling honesty: intelligence lapses, senior commanders underestimating a well-entrenched enemy, and a rush to attack without adequate reconnaissance. The Indian Air Force’s initial unpreparedness for high-altitude combat adds to the realism of his account, yet he’s quick to acknowledge their exceptional logistical support that kept operations alive.

Where this book truly thunders is in its portrayal of the artillery corps. Singh’s descriptions of coordinated barrages by Bofors FH-77Bs, roaring day and night and precisely synchronized with ground advances, reveal how artillery became the silent architect of victory. He doesn’t hesitate to critique the imbalance in post-war glorification: the renaming of Gun Hill to Batra Top symbolizes, in his view, how institutional bias often eclipses artillery’s contributions.

The political backdrop runs as a constant undercurrent. Singh sharply critiques the restrictions imposed for optics, such as the “no crossing LoC” rule, which held even as the enemy violated the line. His tone remains patriotic, but it’s the patriotism of someone who has seen both the brilliance and the blunders of war up close.

Artillery’s Thunder is not merely a military memoir; it’s a mirror held up to India’s defense establishment, urging introspection and readiness. The book closes on a haunting note: the echoes of Kargil are far from silent, especially in light of recent events like the Pahalgam attack and Operation Sindoor.

In the end, this is both a tribute and a warning: it celebrates the men behind the guns as much as it cautions against complacency.

Highly recommended for defense enthusiasts, policy thinkers, and anyone who seeks the unfiltered truth of India’s most hard-fought modern war.